I managed to make more progress on importing Last.fm data. In an earlier post I described how nothing was working, how there is no method to do this, and some of the migration methods that are available. Well, below are the instructions for actually accomplishing it. Of the methods listed online, these were the only steps I found that work for merging two or more Last.fm accounts.

There are some caveats. I was not able to get all of my tracks down from the server. Most of this is because of bad metadata. Out of my 23k submitted tracks, I only managed to get about 20k of them downloaded. In the re-upload, I lost another 6k.

The reason for the loss is two-fold. I think the script has an issue with Kanji characters and skips them. This removed a few video game soundtracks from my track count. Other scripts I have found just died when they reached the bad metadata. Since this is the most data I can get, it is better than nothing.

You will also lose the original play times. If those are important to you, I can't help you. Last.fm will not accept tracks with a play date older than fourteen days, and I have not seen any way around that restriction in the API. I did find a post mentioning that someone had written a script that would rescrobble all of your old music and submit it in a way new accounts are allowed to, but he never released it publicly.

Let's move on to how to do this (as an aside, I did this on OS X, but it should work just as easily on Linux or Windows):

Requirements:

- A Last.fm account

- Python installed

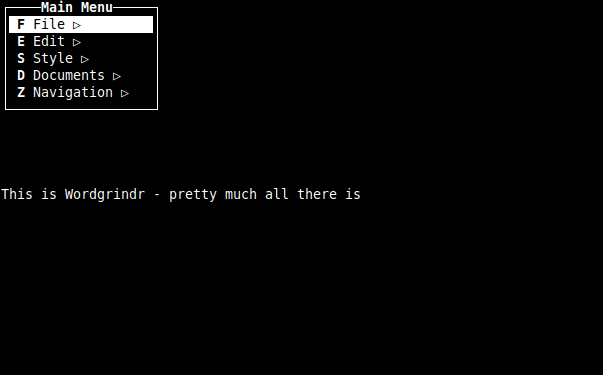

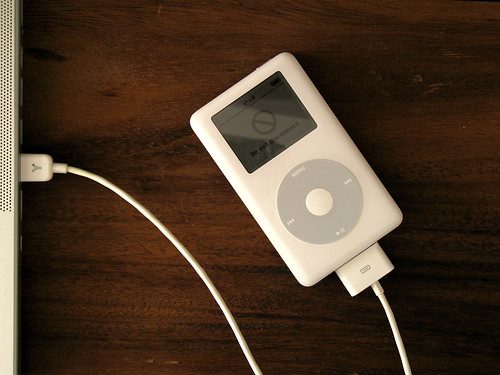

- QTscrob

- A spreadsheet package

- A text editor

1. Download the lastexport.py script from this page.

2. Run the command `python ./lastexport.py --user USERNAME`, replacing USERNAME with the account (yours or any other) that you want to export.

3. When the script completes, it creates a file named exported_tracks.txt. Rename its extension to .csv so you can easily open it in your spreadsheet. The file is tab-delimited, so choose tab as the separator if your spreadsheet asks.

4. You should now have a spreadsheet open with all the data neatly arranged in columns. The first column is the timestamp, the second is the track name, the third is the artist's name, the fourth is the album name, the fifth is the MusicBrainz artist ID, and the last column is the MusicBrainz song ID.

You will now need to rearrange these columns in the following order:

- artist name

- album name (optional)

- track name

- track position on album (optional)

- song duration in seconds

- rating (L if listened at least 50% or S if skipped)

- Unix timestamp when the song started playing

- MusicBrainz Track ID (optional)

For the track position column, I just auto-filled 1s in every cell down the sheet. For the song duration column, I filled in 180 (3 minutes) all the way down, and for the rating column, I filled in L all the way down.
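If you would rather not shuffle columns by hand, the step 4 reordering can be sketched in Python. This is a minimal sketch, not part of lastexport.py: it assumes the six-column export order described above (check it against your own file, since the layout may differ between versions of the script), and `read_exported` and `to_scrobbler_fields` are hypothetical names. The placeholder values are the same ones I used: track position 1, duration 180 and rating L.

```python
import csv

# Placeholder values filled into every row, as described above (not real data).
TRACK_POSITION = "1"   # track position on album (optional field)
DURATION = "180"       # song duration in seconds (3 minutes)
RATING = "L"           # L = listened to at least 50%, S = skipped


def read_exported(path):
    """Read the tab-delimited exported_tracks.txt into a list of rows."""
    with open(path, encoding="utf-8", newline="") as f:
        # QUOTE_NONE: treat quote characters in titles as ordinary text.
        reader = csv.reader(f, delimiter="\t", quoting=csv.QUOTE_NONE)
        return [row for row in reader if row]


def to_scrobbler_fields(row):
    """Reorder one exported row into the eight .scrobbler.log fields.

    Assumes the export order: timestamp, track, artist, album,
    artist MBID, track MBID. Missing trailing columns become "".
    """
    row = list(row) + [""] * (6 - len(row))  # pad short rows
    timestamp, track, artist, album, _artist_mbid, track_mbid = row[:6]
    return [artist, album, track, TRACK_POSITION, DURATION, RATING,
            timestamp, track_mbid]
```

For example, `[to_scrobbler_fields(r) for r in read_exported("exported_tracks.txt")]` gives the rows in the new order. The timestamps are passed through unchanged, so the fourteen-day problem below still applies.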

The Unix timestamp is the tricky one. If any tracks on your list are older than fourteen days, you will need to change their timestamps or Last.fm will ignore those submissions.

Previously I had a friend generate a list of Unix times 180 seconds apart. I copied this in, but realized that my 20k tracks at 180 seconds each reached back much further than fourteen days (20,000 × 180 seconds is about 42 days). So I took the latest 2k times and copied and pasted them 10 times down the list. To find the latest Unix time and understand how the timestamps work, go to this site.
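The timestamp trick can also be scripted. The hypothetical `recent_timestamps` function below steps back from the current time 180 seconds at a time and, once the next time would fall outside the window, starts over from the newest one, which is the same thing as pasting the latest block of times down the sheet again. The 13-day window is my own assumption: a day of margin under Last.fm's fourteen-day limit, so the oldest times are not already too old by the time you submit.

```python
import time

LASTFM_LIMIT = 14 * 24 * 60 * 60  # Last.fm ignores plays older than 14 days
WINDOW = 13 * 24 * 60 * 60        # assumption: keep a one-day safety margin
SPACING = 180                     # seconds between fake plays (3 minutes)


def recent_timestamps(count, now=None, spacing=SPACING, window=WINDOW):
    """Return `count` Unix timestamps, newest first, none older than `window`.

    Times step back from `now` by `spacing` seconds; once the next one would
    fall outside the window, the sequence restarts at `now`.
    """
    if window >= LASTFM_LIMIT:
        raise ValueError("window must be shorter than Last.fm's 14-day limit")
    if spacing <= 0 or spacing > window:
        raise ValueError("spacing must be positive and no larger than window")
    if now is None:
        now = int(time.time())
    per_cycle = window // spacing  # distinct times that fit in the window
    return [now - (i % per_cycle) * spacing for i in range(count)]
```

For 20k tracks, `recent_timestamps(20000)` returns 20,000 times that all fall inside the window. At 180 seconds apart only 6,240 distinct times fit in 13 days, so they repeat, much like my 2k block pasted 10 times.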

At this point, you should have all the columns filled out in the correct order to create a .scrobbler.log file. The website everyone points to for the .scrobbler.log format details seemed to be down when I was doing this, but here is a link to the page in the Internet Archive.

I do have a theory that, with this method, all the tracks can share the same timestamp. One of the earlier methods I tried did not allow that, but if you don't want to work out a bunch of different timestamps, it is something you can test. The difference here is how QTscrob submits these tracks compared to how Libre.fm did in my other article.

5. Select all the data in the spreadsheet, then copy and paste it straight into a new file in your text editor. Spreadsheets normally paste cells as tab-separated text, which is the separator the .scrobbler.log format uses. Add the following lines to the very top of the file:

`#AUDIOSCROBBLER/1.0`

`#TZ/UTC`

`#CLIENT/Rockbox h3xx 1.1`

These lines are required for the file to be recognized as a .scrobbler.log file.
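Because I could not find an example file anywhere, here is a small Python sketch that puts the header lines from step 5 on top of the tab-separated track rows. `build_log_text` is a hypothetical helper; it only checks that each row has the eight fields listed in step 4 and does not validate the values themselves.

```python
# Header lines from step 5, exactly as I used them.
HEADER = ["#AUDIOSCROBBLER/1.0", "#TZ/UTC", "#CLIENT/Rockbox h3xx 1.1"]
FIELD_COUNT = 8  # artist, album, track, position, duration, rating, time, MBID


def build_log_text(rows):
    """Return .scrobbler.log text: header lines, then one tab-separated line
    per track. Every line, including the last, ends with a Unix line feed."""
    lines = list(HEADER)
    for row in rows:
        if len(row) != FIELD_COUNT:
            raise ValueError(f"expected {FIELD_COUNT} fields, got {len(row)}: {row!r}")
        lines.append("\t".join(str(field) for field in row))
    return "\n".join(lines) + "\n"
```

Calling `build_log_text([["Artist", "Album", "Track", "1", "180", "L", "1300000000", ""]])` produces the three header lines followed by a single track line whose fields are separated by tabs; the empty MusicBrainz ID leaves a trailing tab at the end of that line.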

6. Now save the file. You must choose Unix line endings, or at least a format that puts a hard line break at the end of each line; I believe this is what made the file fail for me earlier. If your editor allows it (mine did not), save the file as .scrobbler.log (remember the “.” at the beginning of the file name).

7. If your text editor would not let you save the file as .scrobbler.log, rename it from the command line or terminal, for example with `mv yourfile.txt .scrobbler.log` on OS X or Linux.
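If your editor cannot save Unix line endings or a file name that starts with a dot, steps 6 and 7 can also be done with a short Python sketch. It rests on my own assumptions: the pasted file is UTF-8 (a byte order mark, if present, is stripped), and `to_unix_newlines` and `save_as_scrobbler_log` are hypothetical names. It converts Windows (CRLF) and old Mac (CR) line breaks to LF and writes the result as .scrobbler.log in the directory you give it.

```python
import os


def to_unix_newlines(text):
    """Convert CRLF (Windows) and lone CR (old Mac) line breaks to LF and
    make sure the final line is terminated."""
    text = text.replace("\r\n", "\n").replace("\r", "\n")
    if text and not text.endswith("\n"):
        text += "\n"
    return text


def save_as_scrobbler_log(src_path, directory="."):
    """Write src_path's contents to <directory>/.scrobbler.log with Unix
    line endings. Returns the path of the new file."""
    # utf-8-sig drops a byte order mark, which would corrupt the first header line.
    with open(src_path, encoding="utf-8-sig", newline="") as f:
        text = to_unix_newlines(f.read())
    dest = os.path.join(directory, ".scrobbler.log")
    # newline="" stops Python from translating "\n" back to CRLF on Windows.
    with open(dest, "w", encoding="utf-8", newline="") as f:
        f.write(text)
    return dest
```

For example, `save_as_scrobbler_log("pasted.txt", ".")` (where pasted.txt stands for whatever you saved from the editor) writes .scrobbler.log to the current directory, which is then the directory to point QTscrob at.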

8. Start QTscrob and choose to open a .scrobbler.log. Browse to the directory that contains the file. QTscrob only asks for the containing directory, so you will not be selecting the file itself.

9. At this point (if you have done everything correctly, and I'm not going to troubleshoot files if you have not), you should see a list of tracks in the QTscrob interface. If everything looks good, just click Submit. This may take a few minutes depending on how many tracks you have.

In theory, someone could easily change the lastexport.py script so that it creates the .scrobbler.log file directly. If you are interested in doing this, you would have to change the field order, add the extra columns, and write the header as described in steps four and five.
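Here is roughly what that could look like, written as a separate converter that reads exported_tracks.txt rather than as a patch to lastexport.py. It is a sketch that has not been tested against every version of the export: it assumes the six-column order from step 4, fills in the same placeholder values (position 1, 180 seconds, rating L), and replaces the original play times with recent ones 180 seconds apart inside a 13-day window (my own margin under the fourteen-day limit). `convert_rows` and `convert` are hypothetical names.

```python
import csv
import time

HEADER = "#AUDIOSCROBBLER/1.0\n#TZ/UTC\n#CLIENT/Rockbox h3xx 1.1\n"
SPACING = 180               # fake duration and gap between plays, in seconds
WINDOW = 13 * 24 * 60 * 60  # assumption: a day inside Last.fm's 14-day limit


def convert_rows(rows, now):
    """Yield one .scrobbler.log line per exported row.

    Assumes the export order: timestamp, track, artist, album, artist MBID,
    track MBID. The original timestamp is dropped and replaced by a recent
    one, cycling so that no time is older than WINDOW.
    """
    per_cycle = WINDOW // SPACING
    written = 0
    for row in rows:
        if not row:
            continue  # skip blank lines
        row = list(row) + [""] * (6 - len(row))  # pad short rows
        _old_time, track, artist, album, _artist_mbid, track_mbid = row[:6]
        timestamp = now - (written % per_cycle) * SPACING
        fields = [artist, album, track, "1", str(SPACING), "L",
                  str(timestamp), track_mbid]
        yield "\t".join(fields) + "\n"
        written += 1


def convert(export_path, log_path, now=None):
    """Read a lastexport.py export and write a .scrobbler.log file with
    Unix line endings. Returns the number of tracks written."""
    now = int(time.time()) if now is None else now
    count = 0
    with open(export_path, encoding="utf-8", newline="") as src, \
            open(log_path, "w", encoding="utf-8", newline="") as dst:
        dst.write(HEADER)
        reader = csv.reader(src, delimiter="\t", quoting=csv.QUOTE_NONE)
        for line in convert_rows(reader, now):
            dst.write(line)
            count += 1
    return count
```

Calling `convert("exported_tracks.txt", ".scrobbler.log")` would replace steps 4 through 7; you would then point QTscrob at the directory holding the new file as in step 8.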

At different points, I have spent days trying to find a good way to do this. Because of the bad metadata, most archiving scripts just failed me, so I'm quite happy with the lastexport.py script.

I couldn't find a single place online that told you directly how to make a .scrobbler.log file by hand. All the sites seemed to assume that you would be using software to do this and that only programmers would ever look at the file specification. I could not even find an example file to compare against. If the Internet Archive had not mirrored the site with the file specification, I never would have gotten this far.

If this post has been useful to you, please drop a note and let me know.